First of all, a disclaimer: I use this setup just as a secondary backup, I do not recommend you rely on USB drives as storage media for your FreeNAS server.

Overview

I have a few spare 2 TB drives that used to be in my homeserver, and I wanted to connect them to my FreeNAS every few weeks to store some backups on them, then disconnect them and put them in a drawer.

Preparing the USB hard drive

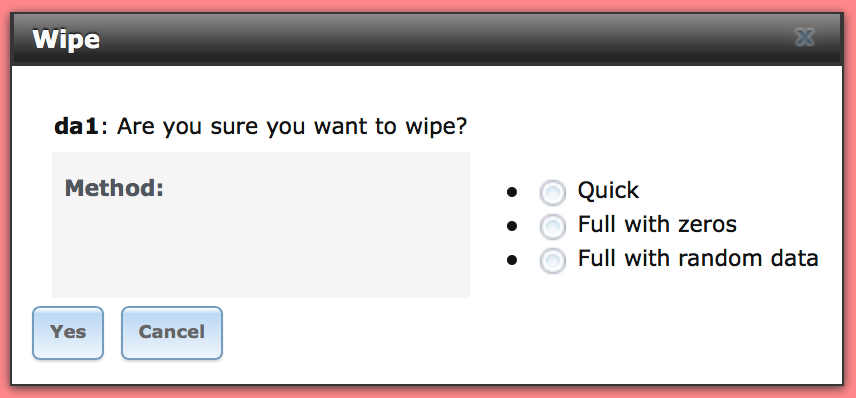

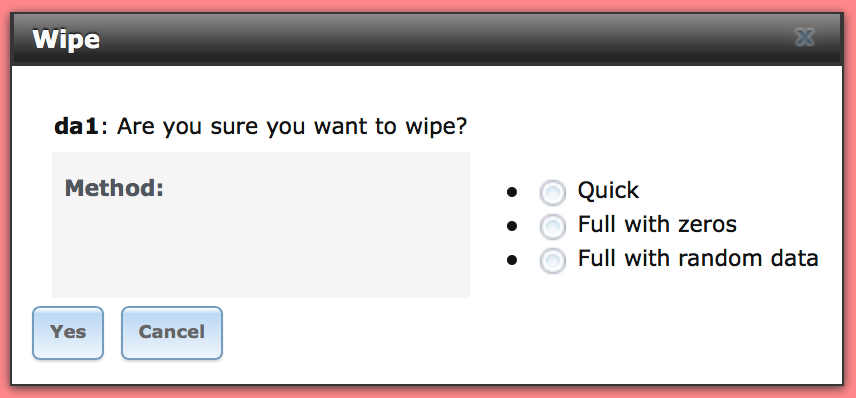

If your drives are not brand new, chances are you already have some data on them, so you’ll need to wipe them first. To do so, connect your drives to FreeNAS, login to the webinterface, go to the Storage tab, click on “View Disks”, select the correct one from the list, then click on the “Wipe” button on the bottom of the screen.

Everything will turn red now. That’s because you’re doing something potentially dangerous. Make sure you really select the correct drive, otherwise you might destroy all of your data.

A Quick wipe is usually enough. Select that and proceed.

Once the wipe is completed, you can turn the disk into a Volume you can use on FreeNAS, just like your main one(s). Head over to the Storage tab, click on the “Volume Manager” button. You will see your newly wiped disk there, give it a volume name, tick the “Encryption” checkbox if you’d like, then click on “Add Volume”. I’ll call mine “ColdStorage” for the purpose of this tutorial.

Do NOT select any “Volume to extend”. That would mean you’d be adding a single (USB!) hard drive striped to your main pool, and that drive would become the single point of failure for all the data in that pool.

After the disk has been initialized, you can start adding datasets, clone other ones, setup network sharing, whatever.

Disconnecting the USB drive

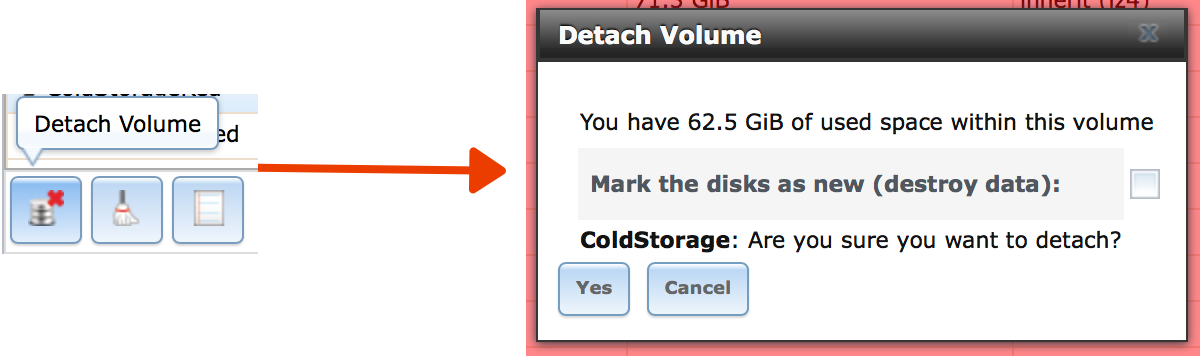

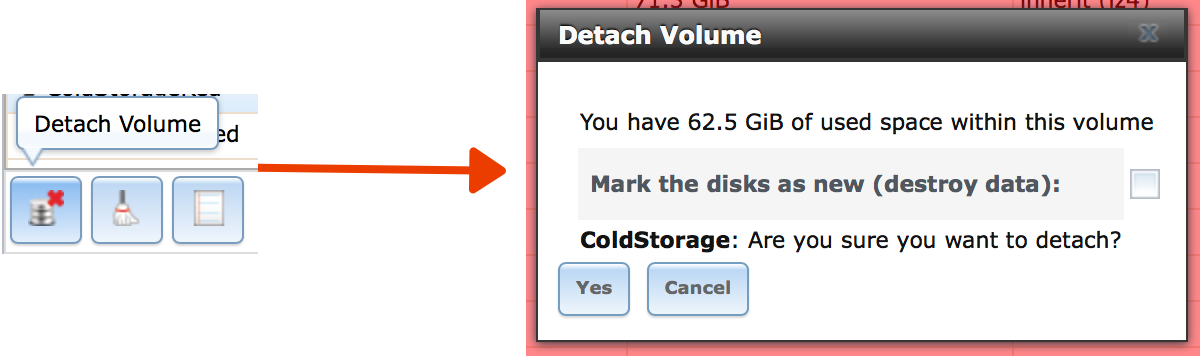

Once you’re done with it, you will want to disconnect your USB drive to store it somewhere. To do so, once again go to the Storage Tab, select your pool from the list (ColdStorage in this example), then click on the “Detach Volume” button. You will only see this button if you select the pool, while if you select the dataset with the same name you will get a different set of buttons.

I’m not sure that I have to point that out, but let’s do it anyway: do NOT select “Mark the disks as new (destroy data)”. Once the pool has been detached, you can disconnect the USB drive.

Reconnecting the USB drive

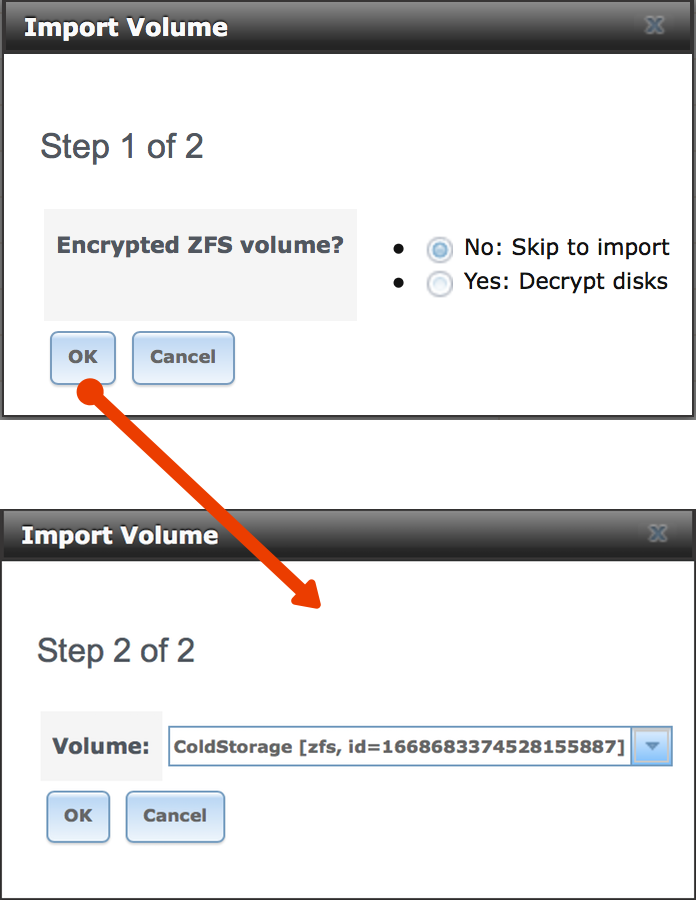

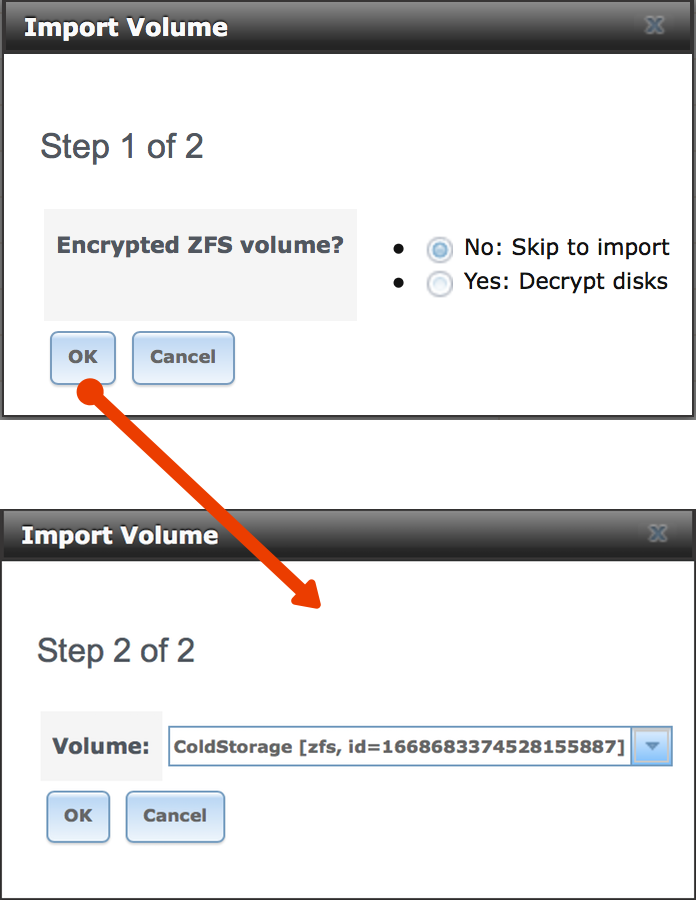

For the thousandth time, go back to your trusty Storage tab, then click on the “Import Volume” button. A box will appear, select wether you have an encrypted volume or not, then click OK. FreeNAS will stop a few seconds to reflect on the good it’s made in its life, then it will present you a dropdown from which you’ll be able to select your USB volume. Click OK and it will be once again available in FreeNAS.