Error: unknown filesystem.

grub rescue>

This was the nice error that greeted me when I tried to (re)boot my Proxmox server.

What unknown filesystem? The server was still supposed to boot from the same ZFS disk it always booted from.

TL;DR: it was due to the large_dnode feature becoming active on a dataset I had created manually. GRUB does not support that feature. The fix: create a new dataset with dnodesize explicitly set to legacy, move the data to it, delete the original and rename the new one. Done.

Some background

Being space-constrained in my apartment, my Proxmox server is actually my everything server. It is my virtualization server, my router (it runs pfSense in a VM) and my NAS (it runs Samba in an LXC container).

For NAS duties, I decided a while back to create a dedicated dataset rpool/nas, which I then mounted in the Samba container. Without much thinking, I just ran a simple:

zfs create rpool/nas

Due to ZFS defaults, it had the dnodesize property set to auto. That was never an issue, until the other day.

What happened

Some file must have triggered a dnode larger than the legacy size (512 bytes) in the dataset, which activated the large_dnode feature on the pool and meant that GRUB could no longer read the drive.
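A quick way to check for this condition on a pool that still imports is to look at the state of the feature flag. The sketch below wraps `zpool get` in a small helper; the function name `large_dnode_state` is made up for illustration, and `rpool` is simply the pool name used in this post. As far as I understand it, `enabled` is harmless for GRUB, while `active` means at least one dataset actually holds a large dnode.

```shell
#!/bin/sh
# Hypothetical helper: report whether large_dnode is in use on a pool.
# Assumes the pool is imported; defaults to "rpool" as in this post.
large_dnode_state() {
    pool="${1:-rpool}"
    # -H drops the header line, -o value prints only the state column
    state=$(zpool get -H -o value feature@large_dnode "$pool") || return 1
    case "$state" in
        active)  echo "$pool: large_dnode is active, GRUB will likely refuse to read this pool" ;;
        enabled) echo "$pool: large_dnode is enabled but not in use yet" ;;
        *)       echo "$pool: large_dnode is $state" ;;
    esac
}
```

Calling `large_dnode_state rpool` before a reboot would have flagged the problem ahead of time.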

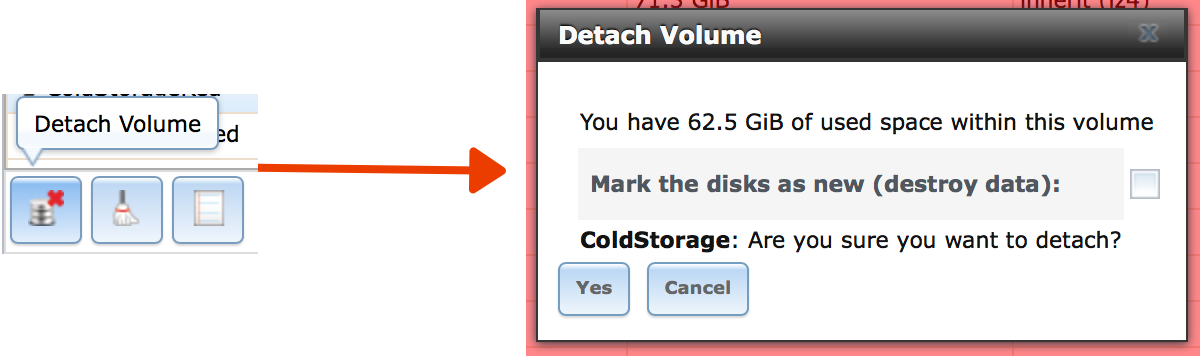

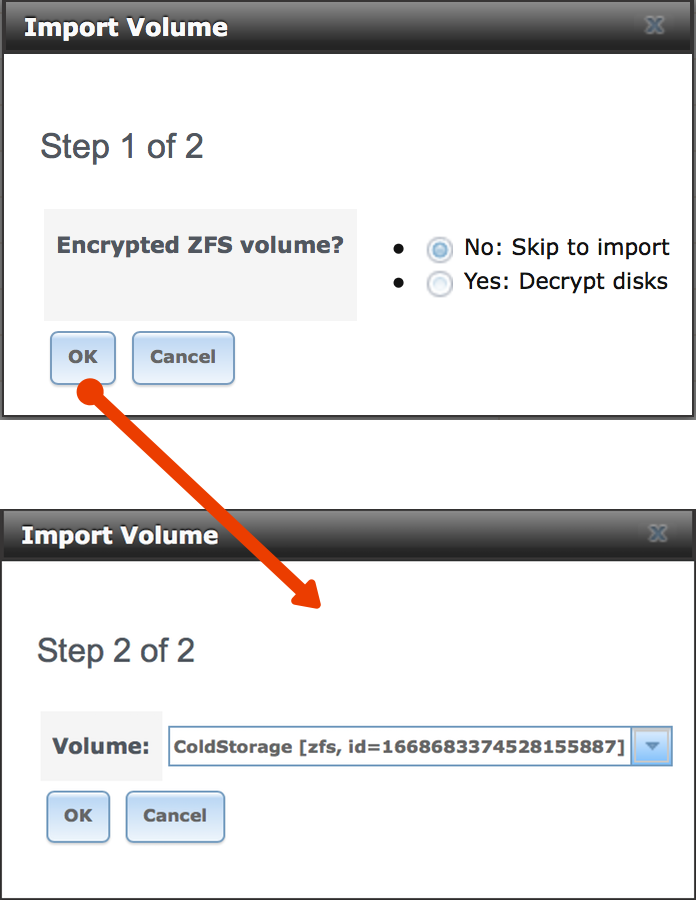

I came to that conclusion by installing Proxmox in a VM on my Mac (with an ext4 boot drive, to avoid having to rename the pool), and attaching the server’s SSD through a SATA-USB adapter.

I changed the mountpoint of my server’s root dataset from / to /recovery, mounted /dev, /sys and /proc in there, and then chrooted into /recovery:

zfs set mountpoint=/recovery rpool/ROOT/pve-1
mount --rbind /dev /recovery/dev
mount --rbind /sys /recovery/sys
mount -t proc /proc /recovery/proc
chroot /recovery

I ran grub-probe -vvvv / to get some insight into why GRUB was failing, and one line stood out:

grub-core/fs/zfs/zfs.c:2112: zap: name = org.zfsonlinux:large_dnode, value = 1, cd = 0
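The verbose output of grub-probe is long, so it can help to filter it down to just the feature lines. The sketch below is a hypothetical helper (`active_zap_features` is my own name for it) that assumes every feature line follows the `zap: name = ..., value = ..., cd = ...` shape of the line quoted above, and prints only features whose value is not zero.

```shell
#!/bin/sh
# Hypothetical filter: read grub-probe verbose output on stdin and print
# "feature value" for every zap entry whose value is non-zero.
# Assumes the line format shown in the post; other GRUB versions may differ.
active_zap_features() {
    sed -n 's/.*zap: name = \([^,]*\), value = \([0-9a-fA-F]*\), .*/\1 \2/p' |
        awk '$2 != "0" { print $1, $2 }'
}

# Usage inside the chroot (both streams are captured, to be safe):
#   grub-probe -vvvv / 2>&1 | active_zap_features
```

Any feature listed here that GRUB does not implement is a candidate for the "unknown filesystem" error.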

I read a bit online and found out about the dnodesize thing. I usually like to link back to all the places that helped me solve a problem I blog about, but this time it was just too difficult to keep track of everything, except for this discussion on the German Proxmox forums.

The solution

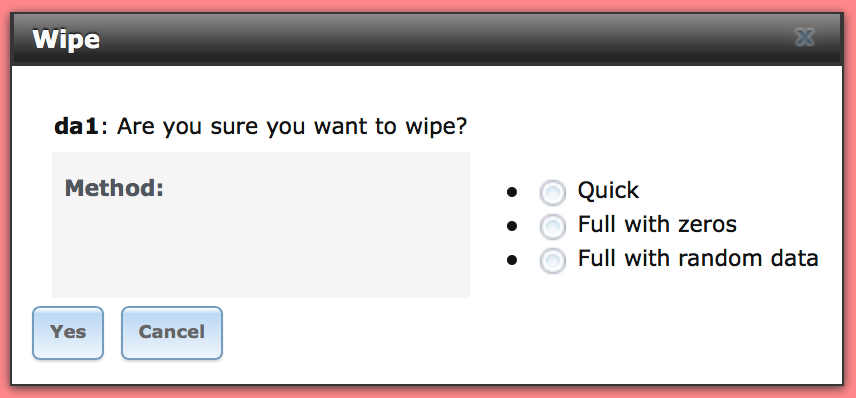

I ran zfs get -r dnodesize rpool to see the dnodesize value of every dataset in the pool. They were all set to legacy, except for my rpool/nas dataset.
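On a pool with many datasets, the odd ones out are easier to spot with script-friendly output. This is a minimal sketch, assuming the tab-separated format that `zfs get -H` produces; the helper name `non_legacy_datasets` is made up for illustration.

```shell
#!/bin/sh
# Hypothetical filter: read "name<TAB>value" lines on stdin and print the
# names of datasets whose dnodesize is anything other than "legacy".
non_legacy_datasets() {
    awk -F '\t' '$2 != "legacy" { print $1 }'
}

# Usage:
#   zfs get -H -r -o name,value dnodesize rpool | non_legacy_datasets
```

In the situation described here, the only name it should print is rpool/nas.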

So I made a new dataset for my NAS data with dnodesize explicitly set to legacy, then I rsync’d everything from the old dataset into the new one, destroyed the old dataset and renamed the new one.

zfs create -o dnodesize=legacy rpool/nas2
rsync -av --progress /rpool/nas/ /rpool/nas2/
zfs destroy rpool/nas
zfs rename rpool/nas2 rpool/nas
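Since zfs destroy is irreversible, a more cautious variant of the same steps would verify the copy before deleting anything. The sketch below is not what I ran; it is a hypothetical `migrate_dataset` function that assumes both datasets are mounted at /<dataset name> (as they are above) and adds a checksum-based rsync dry run as a safety check. It treats any output from that dry run as a difference, which may be stricter than necessary.

```shell
#!/bin/sh
# Hypothetical helper: copy a dataset into a new one created with
# dnodesize=legacy, verify the copy, then swap the names.
# Assumes datasets are mounted at /<name>, e.g. /rpool/nas.
migrate_dataset() {
    old="$1"   # e.g. rpool/nas
    new="$2"   # e.g. rpool/nas2 (temporary name)

    zfs create -o dnodesize=legacy "$new" || return 1
    # Add -H, -A or -X here if hard links, ACLs or xattrs matter to you.
    rsync -a "/$old/" "/$new/" || return 1

    # Dry run with checksums: lists anything that still differs, changes nothing.
    changes=$(rsync -a --dry-run --checksum --itemize-changes --delete \
        "/$old/" "/$new/") || return 1
    if [ -n "$changes" ]; then
        echo "copy differs from source, keeping $old:" >&2
        echo "$changes" >&2
        return 1
    fi

    zfs destroy "$old" || return 1
    zfs rename "$new" "$old"
}
```

With this in place, `migrate_dataset rpool/nas rpool/nas2` performs the same four steps, but stops before the destroy if the two trees do not match.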

Last step: moving back the mountpoint for `rpool/ROOT/pve-1`:

zfs set mountpoint=/ rpool/ROOT/pve-1

And then I moved the SSD back into my server.

That’s it. It just took an afternoon of cursing.