Random post. Since the warranty void sticker was peeling off anyway, I opened the 36V battery of my Decathlon Btwin Elops 920e ebike and took some pictures. Here they are.

Edit Excel Formulas with AutoHotKey

At work I’m stuck using a Windows PC, and I often need Excel.

I really dislike the automation landscape on Windows (nothing comes even close to what we have on the Mac, there’s no Alfred, Hazel, Keyboard Maestro, Better Touch Tool, just to name a few), and the only thing I found that is useful is AutoHotKey. It is much harder to use than Keyboard Maestro, but it is quite powerful.

Today I had to change the formulas in many Excel cells to add an “outer” IFERROR, so I created an AutoHotKey macro to help:

#<::

Send {F2}

Send {Home}

Send {Del}

SendInput, =IFERROR(

Send {End}

Send, ,"")

Send {Enter}

Return

The problem is that at the beginning of time Microsoft decided it was best to localize function names, so the English IFERROR becomes the Italian SE.ERRORE (notice the period between the two words).

If I just replaced SendInput, =IFERROR( with SendInput, =SE.ERRORE( I would get SE,ERRORE in my cell (notice the comma), because of regional settings: we use commas as decimal separators and periods as thousands separators (which also brings the funny consequence of using semicolons as argument separators in Excel functions).

The only way I could find to coerce Excel to accept a period and not to translate it to a comma, was to send a unicode string (really). So the final script, for the Italian locale, becomes:

#<::

Send {F2}

Send {Home}

Send {Del}

SendInput, =SE{U+002E}ERRORE(

Send {End}

Send, `;"")

Send {Enter}

Return

Sign a custom Stripe webhook event

Stripe, its docs and its tools are amazing, but today I found a shortcoming: it is not possible to easily send arbitrary events to a webhook endpoint while having signature verification enabled.

So, I made this little script to make things easier:

You just need to adjust the webhook URL and secret, then point it to a json file containing the event you wish to send.
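For reference, here is a minimal shell sketch of the signing step. It is not the script mentioned above, just an illustration of Stripe's documented scheme: the `Stripe-Signature` header carries a timestamp `t` and a `v1` value, which is the HMAC-SHA256 of `<timestamp>.<payload>` keyed with the endpoint secret. The function name, the localhost URL and the `event.json` file name are placeholders I made up, and it assumes `openssl` is installed.

```shell
#!/bin/sh
# Build the value of the Stripe-Signature header for a payload.
# Arguments: endpoint secret, raw JSON payload, optional Unix timestamp
# (defaults to the current time).
stripe_signature_header() {
    secret=$1
    payload=$2
    timestamp=${3:-$(date +%s)}
    # Stripe signs the string "<timestamp>.<payload>" with HMAC-SHA256.
    # openssl prints "...(stdin)= <hex>", so keep only the hex digest.
    signature=$(printf '%s.%s' "$timestamp" "$payload" \
        | openssl dgst -sha256 -hmac "$secret" \
        | sed 's/^.*= *//')
    printf 't=%s,v1=%s\n' "$timestamp" "$signature"
}

# Usage (URL, secret and file name are placeholders):
#   payload=$(cat event.json)
#   curl -X POST 'http://localhost:3000/webhook' \
#        -H 'Content-Type: application/json' \
#        -H "Stripe-Signature: $(stripe_signature_header 'whsec_...' "$payload")" \
#        --data-binary "$payload"
```

The payload that gets signed must be byte-for-byte the body that gets sent, which is why the usage example reads the file once into a variable and uses it for both.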

Thanks, as always, to a StackOverflow question.

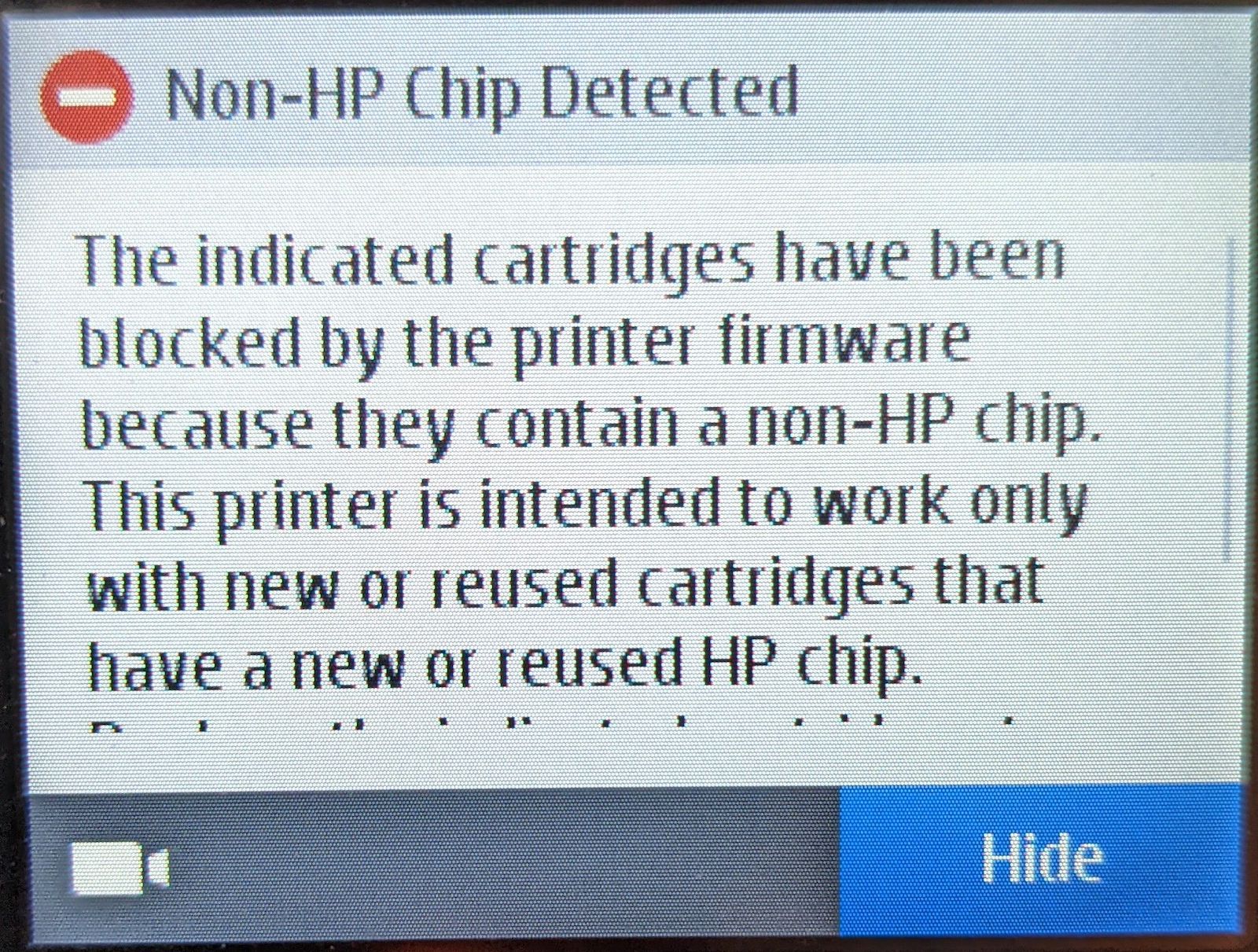

I used all the black ink of my HP PageWide Pro 477dw, so I bought a compatible cartridge and installed it into the printer.

I was greeted with a nice message, courtesy of HP:

The indicated cartridges have been blocked by the printer firmware (MAVEDWPP1N001.2208A.00, released on February 14, 2022) because they contain a non-HP chip.

Not my printer, but the message is the same.

I dug around and found that the easiest way to solve the issue was to downgrade my printer’s firmware to version 1908C.

Somebody on Reddit helpfully linked to this firmware version’s Windows .exe installer, from which I extracted the actual .ful2 image using 7-Zip.

To install it, copy the .ful2 file to the root of a FAT32-formatted thumbdrive (mine had an MBR partition table, I don’t know if it would work on a GUID one), then connect it to the printer. I left it connected for a while, and initially it only presented me with the option of scanning to the drive or printing something from it. After about a minute it went back to the main menu, from which I re-selected the thumbdrive, and this time it showed a button to update the firmware from the thumbdrive. I pushed it and waited for the update to complete (3–4 minutes total).

Now it works great. (And I disabled auto updates, even though I don’t like that. But I won’t let HP dictate what kind of cartridges I can and cannot use.)

So, to recap:

- Put this .ful2 file in the root folder of a FAT32-formatted thumbdrive (MBR partition table).

- Connect it to the printer and wait for the printer to go back to the main menu.

- Press the thumbdrive button from the main menu and then press the button to install the new firmware from it.

- You’re done. Maybe disable auto updates.

I’m also uploading here version 1921E, should it be helpful in the future.

Post update – Two days later

Even though I disabled auto updates, the printer somehow found a way to update anyway (🤦‍♂️), and I was back at the error message above.

I repeated the procedure, it worked fine, and this time I completely blocked the printer from being able to access the internet. It appears to be working 💪🏻.

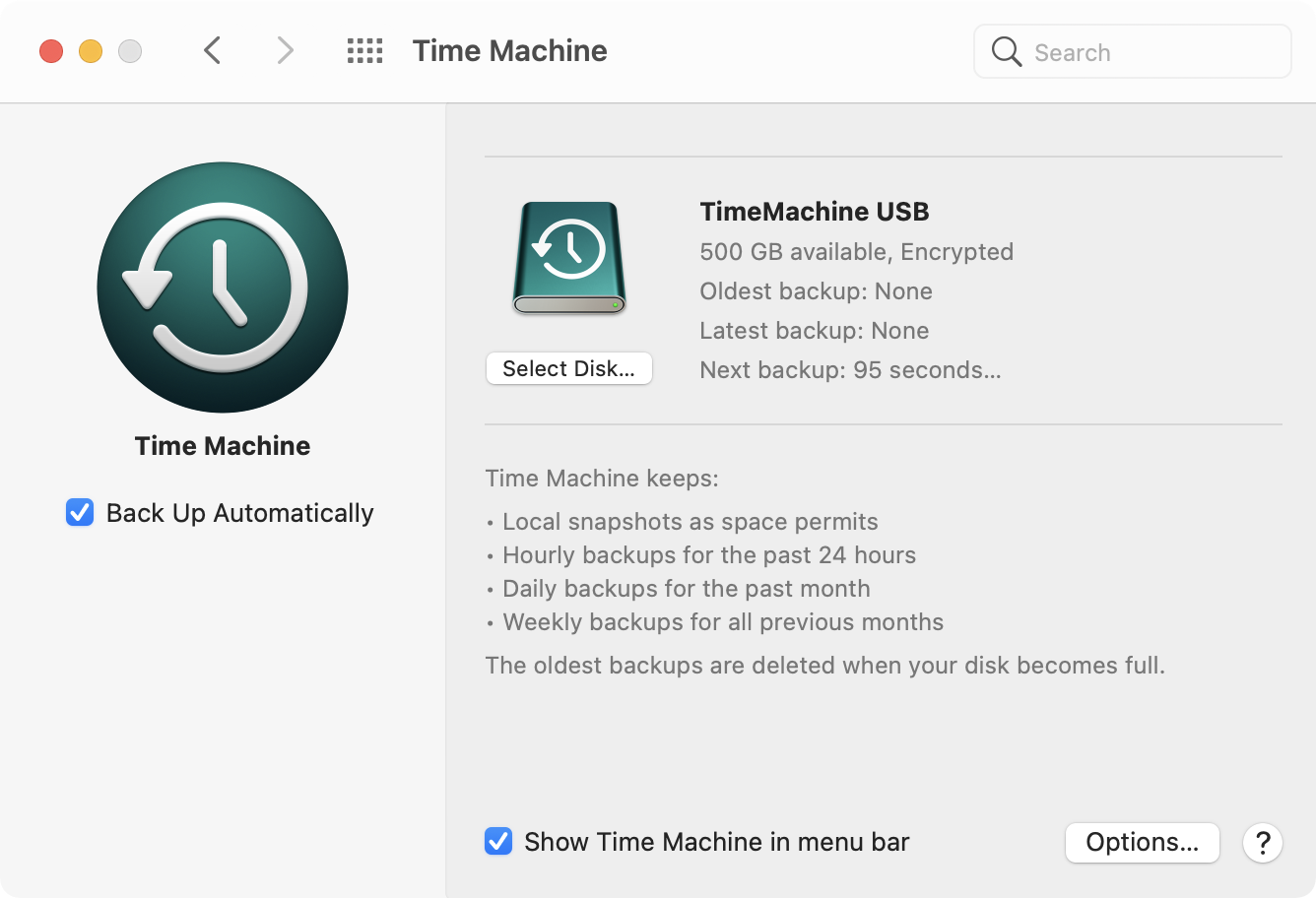

My main computer is a 2020 M1 Mac mini, which is so small, quiet and cool that I can keep it in a drawer of my desk, which due to me living in a small apartment is located in my bedroom.

As part of my backup regime, I have an old 2.5” USB drive connected to it for Time Machine. The problem is that my Mac would often wake up in the middle of the night to perform a backup. That is nice, but it also means that the drive would start grinding, sometimes waking me up or preventing me from falling asleep in the first place.

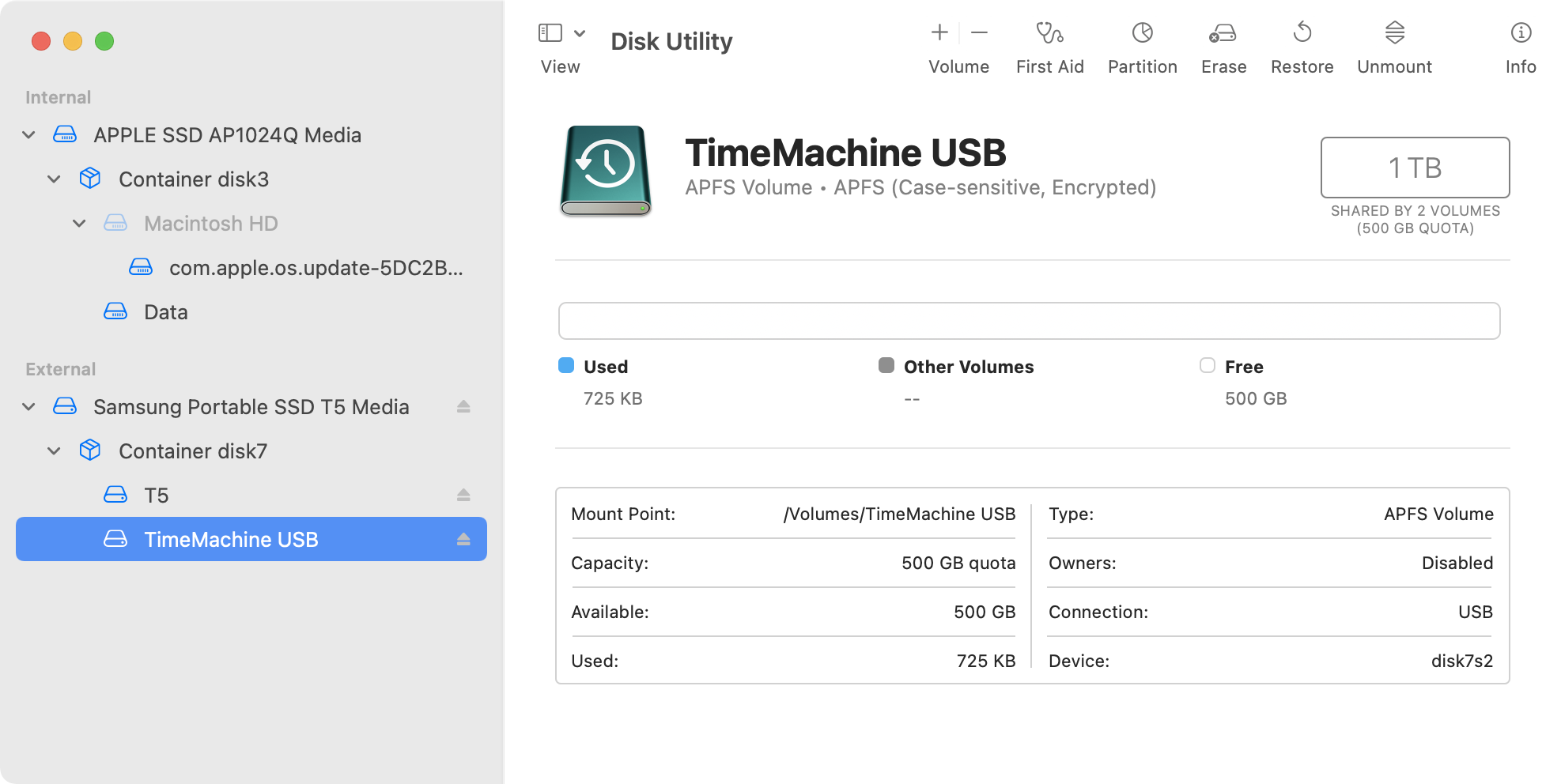

I was fed up with it, and I switched to a 1 TB SSD, which is totally silent, just like my Mac.

Having an entire drive dedicated to Time Machine only, though, was a bit excessive, and I wanted to be able to keep some files on it as well. I know I could just put them in the same APFS partition Time Machine is using, but given how finicky it can be at times, I preferred leaving it alone and resorting to a nice feature of APFS, which is having different volumes on the same disk. Volumes are not like partitions, they are not fixed-size: they share all the available space on the drive. This way you could have 10 volumes on a single 1 TB drive, and they would all see 1 TB of free space in the beginning.

That is all great, and you can easily add volumes to an APFS drive through Disk Utility: you just have to select the drive from the sidebar and click the + button on the toolbar on the right. You also get a handy feature which I wanted to enable for my Time Machine volume: the ability to set a quota for that volume, which is basically a hard limit on the size of that volume. I wanted to make sure Time Machine could use at most 500 GB of the 1 TB, while allowing my data volume to use all the available space if necessary.

The problem is that when I went to the Time Machine preference pane and selected my newly created volume as its target, it would reformat it and remove its quota, thus giving it the ability to expand to the whole drive. What’s worse, it even erased all the contents of my other volume, which I’m afraid is a bug.

The solution, as always, was to resort to the Terminal. I found my answer in this helpful Stack Exchange thread: you have to add the volume using the diskutil command and applying the T role, which stands for Time Machine and will avoid having to erase the volume after selecting it as the destination drive.

diskutil ap addvolume disk7 APFSX 'TimeMachine USB' -passprompt -passphraseHint 1Password -quota 500g -role 'T'

You will, of course, need to replace disk7 with your disk identifier (which you can find in Disk Utility or through the diskutil list command). As you can see I’m also encrypting my volume and setting a hint, but if you don’t need that and are fine with your backups sitting in the clear on your drive, you can omit the -passprompt and the -passphraseHint options.

Fix portainer-agent restart loop

For a while I’ve had two Docker hosts on my home network, one with Portainer installed and the other one with portainer-agent, to be able to have a single web interface to manage both.

The one hosting Portainer has always had watchtower installed, in order to automate the upgrade of all containers, while the second one did not. Once I realized it, I set up watchtower on it as well, and that’s when the issues began.

The issue

portainer-agent would enter a restart-loop, filling the logs with entries such as:

2021/10/31 07:53:21 [INFO] [main] [message: Agent running on Docker platform]

2021/10/31 07:53:21 [ERROR] [main,docker] [message: Unable to retrieve local agent IP address] [error: Error: No such container: 6d3437033dce]

The weird thing is that it was mentioning container 6d3437033dce, while portainer-agent was running as 9bf8b8a94d03.

I suspected it was due to something watchtower was doing when recreating containers after pulling the latest version.

A few searches later, I ended up finding this GitHub issue for portainer-agent, where jackman815 found that the issue was related to the way watchtower assigns the same hostname to the new containers.

I found the problem is about the watchtower, it clones the container configs included hostname when upgrading the container.

When upgrade/re-create the container, by default the docker daemon will assign a new hostname to the container and update internal dns, but the watchtower won’t update the container hostname after the container upgrade so the agent will try to look up the old hostname and it would be getting nxdomain result by docker internal dns.

The solution

As suggested in that issue, the solution is assigning a static hostname to the portainer-agent container.

To do so, stop and delete the old container, then re-create it with the --hostname option.

docker stop portainer_agent

docker rm portainer_agent

docker run -d -p 9001:9001 --name portainer_agent --hostname portainer_agent --restart=always -v /var/run/docker.sock:/var/run/docker.sock -v /var/lib/docker/volumes:/var/lib/docker/volumes portainer/agent:latest

Of course you’ll have to adjust the container name/hostname to match your setup.
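To double check that the static hostname actually stuck (for example after watchtower has recreated the container), a small helper along these lines could be used. This is just a sketch: the function names are made up, and portainer_agent is the container name/hostname from the command above.

```shell
#!/bin/sh
# Print the hostname Docker has configured for a container.
container_hostname() {
    docker inspect --format '{{.Config.Hostname}}' "$1"
}

# Succeed only if the container's hostname matches the expected static value.
# Arguments: container name, expected hostname.
has_static_hostname() {
    [ "$(container_hostname "$1")" = "$2" ]
}

# Usage:
#   has_static_hostname portainer_agent portainer_agent && echo "hostname is static"
```

If the hostname printed is a 12-character container ID instead of the name you chose, the container was created without --hostname.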

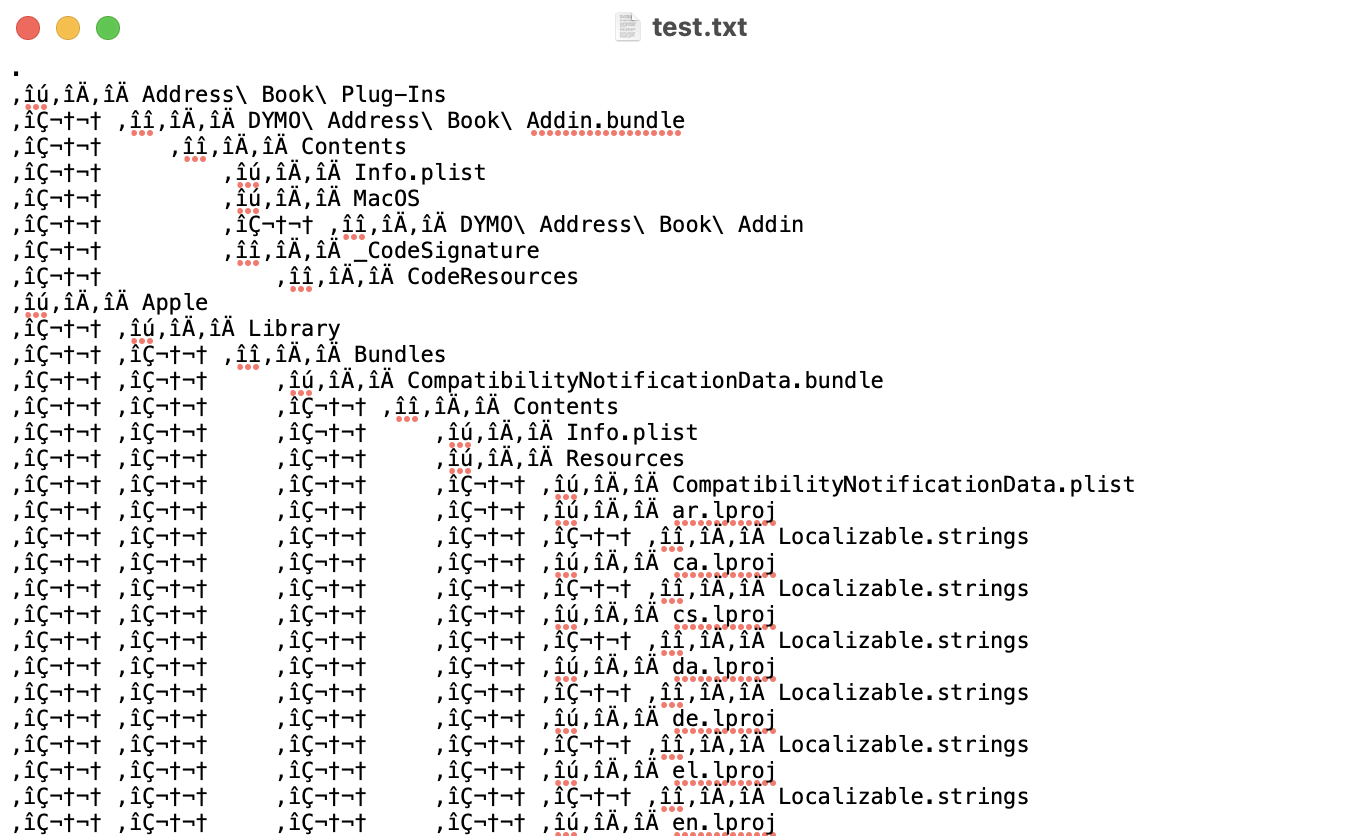

Recently I had the need to produce a text file containing the directory structure of a folder and all its content.

The tree command is just what I needed. A quick brew install tree installed it on my Mac.

One would think that it would just be a matter of running

tree /path/to/folder > /path/to/tree.txt

That’s true, for small directories (or those with no special characters?), for others I get this lovely crap:

Turns out the fix is pretty easy (although you lose non-ASCII characters in file names):

LC_ALL=C tree /path/to/folder > /path/to/tree.txt

Podcast chapters and Ableton Live

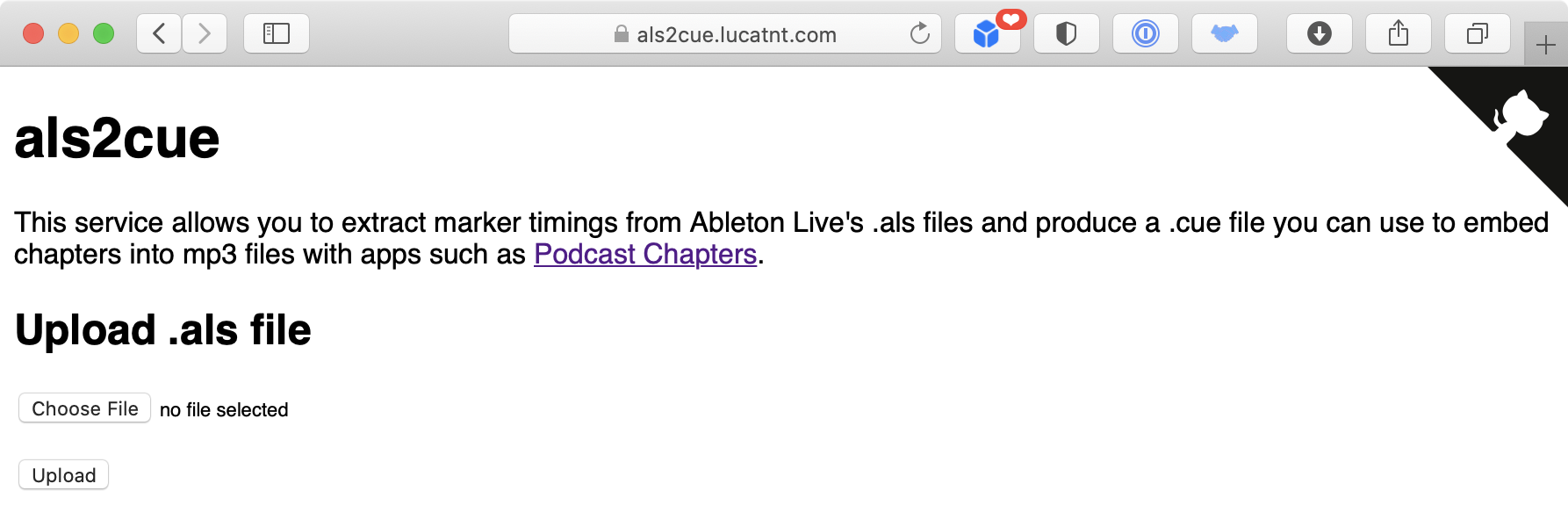

TL;DR Extract marker timestamps from Ableton Live .als project files into .cue files to generate podcast chapters with als2cue.

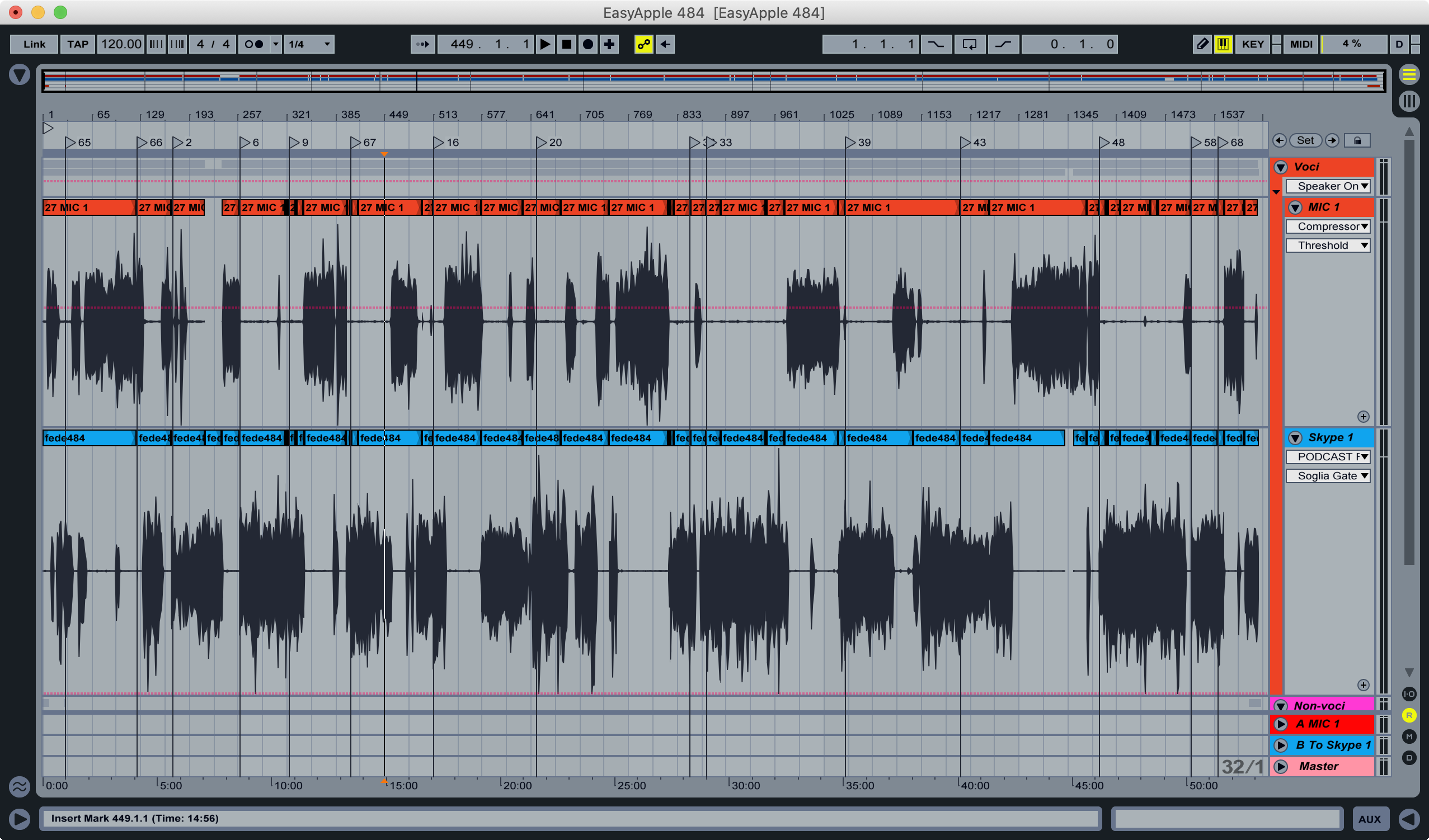

I’ve been recording and publishing podcasts for almost 10 years, and for most of the last decade I’ve been using Ableton Live to record and edit them. I was already familiar with Live from my “deejay period”, when I approached EDM music production, so it felt natural to keep using the tool I already knew.

The rise of podcast chapters

In the last few years, adding chapters to podcast files has become mainstream. It is very handy for listeners to jump around within an episode to reach the section they’re interested in. Heck, I even added automatic chapter listings to the CMS I developed for my podcast network (see an example in this episode, look at the “Capitoli” section).

But while it is great for listeners, the same cannot be said for podcasters. It requires significant effort to place and name chapters by hand, and I needed to automate the process a bit to make it doable without investing too much time in it.

Ableton Live Markers Locators

Ableton Live includes a great feature, Locators, to help mark sections of an arrangement. I add them while recording to mark roughly when we changed topics, then during editing I fine tune their location.

The thing is, when you export an .aiff file from Live, you don’t get the position of each marker in the file’s metadata like you do in Logic Pro, and my mp3 encoding app of choice, Forecast, cannot automatically insert chapter markers in the .mp3 file.

Extracting Locators from .als project files

Digging around, I found out that Ableton Live’s .als project files are basically just gzip-compressed XML files.

I then wrote a Python script that takes the .als file as input, and spits out a .cue file containing all the timestamps. Why a .cue file, you ask? That’s because Podcast Chapters, an amazing Mac app by Fredrik Björeman, supports .cue files to create chapters, which can then be named easily through its GUI.
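The Python script itself is not reproduced here, but the two mechanical pieces can be sketched in shell. The sketch assumes the .als container is gzip-compressed XML and that you already have each locator's position in seconds; how the real script reads the Locators from the XML and converts them is not shown in this post, so that part is left out. All function names are hypothetical. Cue sheets express time as MM:SS:FF, with 75 frames per second.

```shell
#!/bin/sh
# Print the XML inside an .als project (assumed to be gzip-compressed).
als_xml() {
    gzip -dc "$1"
}

# Convert seconds (decimals allowed) to the MM:SS:FF form used by cue sheets.
# FF counts frames, 75 per second, rounded to the nearest frame.
cue_timestamp() {
    awk -v s="$1" 'BEGIN {
        frames = int(s * 75 + 0.5)
        printf "%02d:%02d:%02d\n", int(frames / 4500), int((frames % 4500) / 75), frames % 75
    }'
}

# Read positions in seconds, one per line on stdin, and emit a cue sheet
# with one untitled track per position. Argument: the audio file name.
write_cue() {
    printf 'FILE "%s" MP3\n' "$1"
    n=0
    while read -r seconds; do
        n=$((n + 1))
        printf '  TRACK %02d AUDIO\n    INDEX 01 %s\n' "$n" "$(cue_timestamp "$seconds")"
    done
}

# Usage (file names are placeholders):
#   als_xml project.als | less
#   printf '0\n75\n190.5\n' | write_cue episode.mp3 > episode.cue
```

Tracks are left without titles on purpose, since the chapters get named afterwards in the GUI anyway.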

It has worked great for me for more than a year, but it is not really user friendly.

My brother needed to edit an episode of another podcast, and it was just too cumbersome to have him run the Python script, so I decided to build a really simple web interface around my script: als2cue_web was born.

als2cue_web, who dis?

The web app is available for everyone to use at als2cue.lucatnt.com, it just asks you to upload a .als and returns a .cue file with each locator’s timestamp.

It is open source, and thus can be easily self hosted. It is available from my Github repo (pull requests welcome!) and as a ready-to-use Docker image, lucatnt/als2cue_web, you just need to expose port 80 of the container to a local port.

Error: unknown filesystem.

grub rescue>

This was the nice error that greeted me when I tried to (re)boot my Proxmox server.

What unknown filesystem? The server was still supposed to boot from the same ZFS disk it always booted from.

TL;DR it was due to the large_dnode feature getting enabled on a dataset I had manually created. GRUB does not support that feature. Create a new dataset with that feature explicitly disabled, move the data to it, delete the original and rename the new one. Done.

Some background

Being space-constrained in my apartment, my Proxmox server is actually my everything server. It is my virtualization server, my router (it runs pfSense in a VM) and my NAS (it runs Samba in an LXC container).

For NAS duties, I decided a while back to create a dedicated dataset rpool/nas, which I then mounted in the Samba container. Without much thinking, I just ran a simple:

zfs create rpool/nas

Due to ZFS defaults, it had the dnodesize property set to auto. It was never an issue, until the other day.

What happened

Some file must have triggered a dnode larger than the legacy size (512 bytes) in the dataset, which meant that GRUB could no longer read the drive.

I came to that conclusion by installing Proxmox in a VM on my Mac (with an ext4 boot drive, to avoid having to rename the pool), and attaching the server’s SSD through a SATA-USB adapter.

I changed the mountpoint of my server’s boot partition from / to /recovery, mounted /dev, /sys and /proc in there and then chrooted into /recovery:

zfs set mountpoint=/recovery rpool/ROOT/pve-1

mount --rbind /dev /recovery/dev

mount --rbind /sys /recovery/sys

mount -t proc /proc /recovery/proc

chroot /recovery

I ran grub-probe -vvvv / to get some insight on why GRUB was failing and one line was interesting:

grub-core/fs/zfs/zfs.c:2112: zap: name = org.zfsonlinux:large_dnode, value = 1, cd = 0

I read a bit online and I found out about the dnodesize thing. I usually like to link back to all the places that were useful to find a solution to any problem I blog about, but this time it was just too difficult to keep track of everything, except for this discussion on the German Proxmox forums.

The solution

I ran zfs get -r dnodesize rpool to get a sense of what were the different dnodesize values for all the datasets in the pool. They were all set to legacy, except for my rpool/nas dataset.
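On a pool with many datasets it can be handy to print only the offenders. Here is a small sketch of that, using the scripted output mode of zfs get (-H for tab-separated output without headers, -o to pick the columns). The function name is made up, and it assumes dataset names contain no whitespace.

```shell
#!/bin/sh
# List the filesystems under a pool whose dnodesize is not "legacy".
# Argument: pool (or parent dataset) name.
non_legacy_dnode_datasets() {
    zfs get -r -H -t filesystem -o name,value dnodesize "$1" \
        | awk '$2 != "legacy" { print $1 }'
}

# Usage:
#   non_legacy_dnode_datasets rpool
```

An empty output means every filesystem in the pool still uses legacy dnodes.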

So I made a new dataset for my NAS data with dnodesize explicitly set to legacy, then I rsync’d everything from the old dataset into the new one, destroyed the old dataset and renamed the new one.

zfs create -o dnodesize=legacy rpool/nas2

rsync -av --progress /rpool/nas/ /rpool/nas2/

zfs destroy rpool/nas

zfs rename rpool/nas2 rpool/nas

Last step: moving back the mountpoint for `rpool/ROOT/pve-1`:

zfs set mountpoint=/ rpool/ROOT/pve-1

And then I moved the SSD back into my server.

That’s it. It just took an afternoon of cursing.

Moving Proxmox ZFS boot drive to a new disk

When I assembled my little Proxmox home server, mainly used for pfSense, Home Assistant, Nextcloud and a few other apps, I underestimated the amount of storage I needed. I went with a cheap 120 GB SSD, but it was pretty much always full. I then found a deal on a 960 GB Kingston A400 SSD, so I got it.

The thing is I didn’t really want to go through a complete reinstall of Proxmox, restore of my VMs/CTs and reinstallation of Zabbix Agent on the host itself. Thankfully, my whole drive is ZFS formatted, so I have access to the great zfs send and zfs receive commands to move stuff around.

The steps

- Connect the SSD with a USB-SATA adapter to a Virtual Machine running on my Mac, and install Proxmox on it. This takes care of the GRUB bootloader.

- On the main Proxmox install, shut down all CTs and VMs, and take a snapshot

- Connect the drive to Proxmox and import the pool with a different name

- ZFS send/receive the snapshot (recursively) to the new pool

- Export the pool

- Shutdown Proxmox and swap the drives

- Power on Proxmox, fix the pool name, reboot

- Fix the bootloader and initial ramdisk

- Profit (?)

1. Proxmox install on the new SSD

So the first step is to install Proxmox on the new SSD, and the easiest thing I could think of was to use a simple USB3 to SATA adapter to connect it to my Mac, and then pass it to a VM in Parallels with the Proxmox ISO mounted. I then proceeded with a regular install, choosing ZFS as the SSD’s filesystem. I could have done it all on a VM on the Proxmox server, but I didn’t bother.

2. Snapshot the old drive

Then I moved to the Proxmox server, shut down every VM and every CT, and took a recursive snapshot of the main pool (rpool is the default pool name on Proxmox):

sudo zfs snapshot -r rpool@newSSD

3. Connect the new SSD to Proxmox

After shutting down the VM I used to install Proxmox on the new SSD, I moved the USB3-SATA adapter to the Proxmox server.

First I needed to import the pool with a new name (rpoolUSB), since of course rpool was already taken.

sudo zpool import -d /dev

sudo zpool import [ID-OF-THE-POOL-ON-THE-NEW-SSD] rpoolUSB

4. Clone the old SSD onto the NEW one

Having just taken the snapshot on the old drive, it was just a matter of a ZFS send/receive, with the -F option to overwrite the pool. This operation left the bootloader intact, which is great.

sudo zfs send -R rpool@newSSD | sudo zfs recv -F rpoolUSB
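Before exporting the pool and swapping drives, it may be worth checking that every snapshot made it across. This is a rough sketch, not something from the original procedure: the function names are made up, it assumes the pool names used above (rpool and rpoolUSB), and it may need sudo depending on your setup.

```shell
#!/bin/sh
# Print the names of all snapshots in a pool, sorted, with the pool name
# stripped from the front so two pools can be compared.
snapshot_names() {
    zfs list -H -o name -r -t snapshot "$1" | sed "s|^$1||" | sort
}

# Succeed if both pools hold exactly the same set of snapshots.
same_snapshots() {
    [ "$(snapshot_names "$1")" = "$(snapshot_names "$2")" ]
}

# Usage:
#   same_snapshots rpool rpoolUSB && echo "all snapshots were received"
```

This only compares snapshot names, not their contents, so treat it as a sanity check rather than a verification of the data.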

5. Export the new pool

sudo zpool export rpoolUSB

6. Shutdown Proxmox and swap the drives

Connect a display to your Proxmox server if you don’t have one, or connect through KVM if your server has IPMI capabilities.

7. Fix the pool name

Remember how we renamed the pool to rpoolUSB in step 3? Proxmox doesn’t like that. Or rather, it doesn’t know about that. So the boot process will fail, leaving you at a Busybox shell. Just import the pool giving it the usual rpool name and exit.

zpool import -d /dev

zpool import rpoolUSB rpool

exit

8. Fix the bootloader and initial ramdisk

The boot process now works fine, but it complains about some missing things. What’s needed is a fix of the initial ramdisk and possibly of the GRUB bootloader. I did both just to be on the safe side.

sudo update-grub2

sudo update-initramfs -u -k all

9. Profit

Let me know if you actually profited from this. I think you owe me 1% of your profits 😁